The “Too Many Open Files” error occurs on Linux when a process reaches its file descriptor limit. Less often, the whole system reaches its limit. Every open file, socket, or network connection is represented by a file descriptor. Once the limit is reached, the kernel refuses to open new ones, and the program that requested one receives an error.

Need headroom for busy workloads? If your current host caps you with conservative defaults, consider moving to a VPS that’s built for sustained concurrency. Check our AlphaVPS Cheap VPS plans, with KVM virtualization, full root, and fast disks—so you can tune file limits and kernel settings without fighting the platform.

This error is common on Linux VPS servers that handle many simultaneous requests. Typical examples are high-traffic websites, shared hosting environments, and services run by Nginx, Apache, or MySQL, which can keep many files or connections open at once. If the limits are too low, the server reaches them quickly.

Left unresolved, the error can cause application crashes, failed database connections, and website outages. Fixing the open files limit on a Linux VPS promptly keeps performance steady and avoids downtime.

What Causes the “Too Many Open Files” Error?

Linux uses file descriptors to keep track of open files, network sockets, and device handles. Each process has a limit on how many descriptors it can hold, and the system as a whole has another. When a process reaches its limit, further attempts to open files or sockets fail with the EMFILE error, which is reported as “Too many open files”.

This usually happens on busy servers that handle many connections at once:

- Web servers may serve thousands of requests concurrently.
- Databases keep connections open.
- Background jobs hold files open.

Under sustained load, usage climbs toward the limit until no descriptors are left.

Poorly written application code makes the situation worse. An application that opens files or sockets but never closes them leaks descriptors. It slowly uses up every available descriptor, until the process, and sometimes the whole system, becomes unstable.

How to Check Current Open File Limits

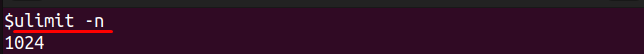

Linux provides commands to check how many files your system and users can open. Start by finding the per-user limit with the ulimit -n command.

This command shows the maximum number of open files allowed for the current shell session.
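The soft limit is the one currently enforced. The hard limit is the ceiling a regular user can raise it to. A small sketch, assuming bash on Linux, prints both, along with the kernel's own record of the shell's limits:

```bash
#!/usr/bin/env bash
# Soft limit: the value enforced right now for this shell and its children.
soft=$(ulimit -Sn)
# Hard limit: the highest value a non-root user may raise the soft limit to.
hard=$(ulimit -Hn)
echo "soft limit: $soft"
echo "hard limit: $hard"
# The kernel's view of this shell's limits (columns: soft, hard, units).
grep 'Max open files' /proc/$$/limits
```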

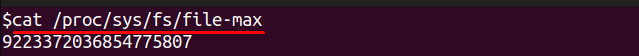

Next, check the system-wide limit for all processes with cat /proc/sys/fs/file-max.

This displays the maximum number of file descriptors that the kernel allows across the entire system.
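A short sketch, assuming bash and the standard /proc interface, reads two values:

- the system-wide limit (fs.file-max);
- fs.nr_open, the kernel's cap on how high any single process's open files limit can be set.

```bash
#!/usr/bin/env bash
# Maximum number of file handles the kernel will allocate system-wide.
file_max=$(cat /proc/sys/fs/file-max)
echo "fs.file-max: $file_max"
# Per-process ceiling: no process's hard limit can be set above this value.
nr_open=$(cat /proc/sys/fs/nr_open)
echo "fs.nr_open:  $nr_open"
```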

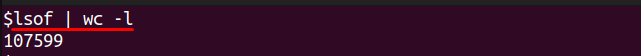

To see how many files are currently open, count the entries reported by lsof, for example with lsof | wc -l.

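This counts open files and network sockets. However, lsof also lists entries that are not descriptors, such as memory-mapped libraries and working directories, so treat the total as a rough figure. Compare it with the limits above to see how close you are to running out of file descriptors.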
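lsof may not be installed on a minimal VPS image. A rough alternative, assuming bash and a standard /proc filesystem, reads the kernel's own counters instead:

- The first field of /proc/sys/fs/file-nr is the number of allocated file handles.
- Each process's open descriptors are listed under /proc/<pid>/fd.

The script prints the system-wide figure and the ten processes holding the most descriptors.

```bash
#!/usr/bin/env bash
# /proc/sys/fs/file-nr holds three numbers: allocated file handles,
# free handles (always 0 on modern kernels), and the system-wide maximum.
read -r allocated free_handles maximum < /proc/sys/fs/file-nr
echo "system-wide: $allocated of $maximum file handles allocated ($free_handles free)"

# Each entry in /proc/<pid>/fd is one open descriptor of that process.
# Without root, other users' processes show a count of 0.
echo "top descriptor users (count pid command):"
for dir in /proc/[0-9]*; do
  count=$(ls "$dir/fd" 2>/dev/null | wc -l)
  echo "$count ${dir#/proc/} $(cat "$dir/comm" 2>/dev/null)"
done | sort -rn | head -n 10
```

Run it as root to include services owned by other users. A process whose count keeps growing over time is a likely descriptor leak.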

Temporary Fix: Increasing Open Files Limit

If you hit the open files limit, you can raise it temporarily for the current session. First, check your existing limit with ulimit -n.

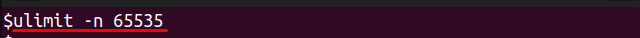

To increase it, run ulimit -n 65535.

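This sets the open files limit to 65,535 for the current shell and any programs started from it, until you close the shell or log out. You can pick a different value, but a regular user cannot go above the hard limit (shown by ulimit -Hn); only root can raise it. In bash, ulimit -n without -S or -H sets both the soft and the hard limit.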

After running the command, confirm the change by running ulimit -n again. This method is helpful for quick troubleshooting, but it does not affect services that are already running and does not survive a reboot. For a long-term solution, update the system configuration files.
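A slightly more careful sketch, assuming bash, changes only the soft limit (-S). It caps the request at the current hard limit, so it does not fail for a regular user. The 65535 target is just the example value used above.

```bash
#!/usr/bin/env bash
# Desired soft limit (example value; adjust as needed).
target=65535
hard=$(ulimit -Hn)
# A non-root user cannot exceed the hard limit, so cap the request at it.
if [ "$hard" != "unlimited" ] && [ "$hard" -lt "$target" ]; then
  echo "hard limit is $hard; capping the request at that value" >&2
  target=$hard
fi
# Raise only the soft limit; the hard limit stays untouched.
ulimit -Sn "$target"
# Programs started from this shell from now on inherit the new soft limit.
echo "new soft limit: $(ulimit -Sn)"
```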

Permanent Fix: Updating Open File Limits

A permanent fix requires changing system configuration so higher limits stay active after a reboot.

Edit /etc/security/limits.conf

Open the file as root in a text editor, for example with sudo nano /etc/security/limits.conf.

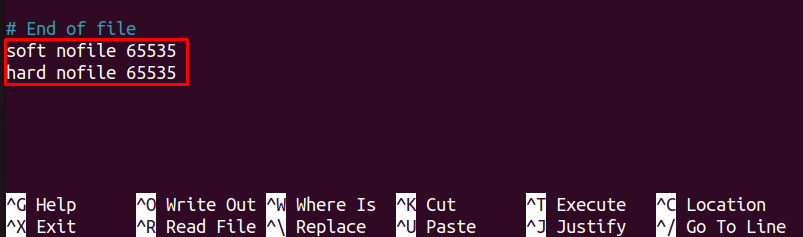

At the bottom, add one soft and one hard nofile entry for the users who need the higher limit.
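Assuming the 65,535 value from the temporary fix, and that every user should get it, the two entries could look like the fragment below. The fields are domain, type, item, and value.

The * wildcard does not match root. If root also needs the higher limit, add the same two lines with root as the domain. The hard value must not exceed the kernel's fs.nr_open.

```
# <domain>  <type>  <item>   <value>
*           soft    nofile   65535
*           hard    nofile   65535
```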

The soft limit is the value processes get by default, and the hard limit is the ceiling a user can raise the soft limit to. Save the file and exit the editor. The new values take effect for new login sessions.

Enable PAM Limits

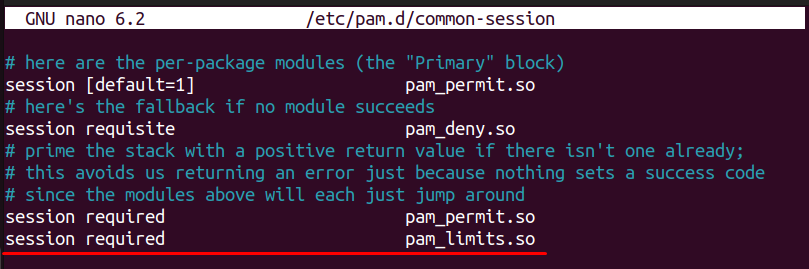

Check that pam_limits.so is loaded. On Debian and Ubuntu, open /etc/pam.d/common-session and /etc/pam.d/common-session-noninteractive.

Make sure both contain a session line that loads pam_limits.so.
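The entry usually looks like the line below. It loads pam_limits, the module that reads /etc/security/limits.conf when a session starts. The exact set of PAM files differs between distributions, so check where your system loads it.

```
session required pam_limits.so
```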

Configure Systemd (If Your System Uses It)

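Edit the systemd manager settings in /etc/systemd/system.conf. If user services also need the higher limit, edit /etc/systemd/user.conf as well.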

In the [Manager] section of each file, add or uncomment the DefaultLimitNOFILE line and set it to the new limit.
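Assuming the same 65,535 value, the finished entry would look like the fragment below. It sets the default for services that do not set their own LimitNOFILE=. This matters because services started by systemd do not pass through PAM, so limits.conf does not apply to them.

```
[Manager]
# Default open files limit for units that do not set LimitNOFILE= themselves.
# A soft:hard pair such as 65535:65535 is also accepted.
DefaultLimitNOFILE=65535
```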

Save the files, then run sudo systemctl daemon-reexec so systemd re-reads its configuration.

Finally, restart the affected services or reboot the VPS to apply changes.
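Processes keep the limits they started with, so it is worth confirming that restarted services actually picked up the new value. The sketch below assumes bash and /proc. It reports the soft limit and descriptor count for every process with a given command name.

- The function name nofile_report is hypothetical.
- Command names vary by distribution, for example nginx, apache2 or httpd, and mysqld or mariadbd.

```bash
#!/usr/bin/env bash
# Report the soft open files limit and current descriptor count for every
# running process whose command name (/proc/<pid>/comm) matches $1.
# Only /proc is used. Without root, descriptor counts for other users'
# processes read as 0 because their fd directories are not accessible.
nofile_report() {
  local name=$1 dir soft in_use found=0
  for dir in /proc/[0-9]*; do
    [ "$(cat "$dir/comm" 2>/dev/null)" = "$name" ] || continue
    found=1
    # Row format: "Max open files  <soft>  <hard>  files", so field 4 is the soft limit.
    soft=$(awk '/^Max open files/ {print $4}' "$dir/limits" 2>/dev/null)
    in_use=$(ls "$dir/fd" 2>/dev/null | wc -l)
    echo "PID ${dir#/proc/}: $in_use open, soft limit $soft"
  done
  [ "$found" -eq 1 ] || echo "no running process named $name"
}

# Example: check Nginx after restarting it (the process name is an assumption).
nofile_report nginx
```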

Optimizing Applications to Prevent Recurrence

Raising file limits solves the immediate issue, but preventing the error requires tuning the applications that open many files.

Web Servers

If you use Nginx, edit its main configuration file, usually /etc/nginx/nginx.conf.

Raise worker_connections inside the events block, and set worker_rlimit_nofile in the main context, outside any block.
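A hedged example with assumed values, to be tuned to your traffic. worker_connections counts every connection a worker holds, including connections to proxied upstream servers. It cannot exceed the worker's open files limit, which is why worker_rlimit_nofile is set well above it.

```
# Main context (top level of nginx.conf, not inside events):
# open files limit for each worker process.
worker_rlimit_nofile 65535;

events {
    # Simultaneous connections per worker, counting client and upstream sockets.
    worker_connections 16384;
}
```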

Save the file, check the syntax with sudo nginx -t, and reload Nginx with sudo systemctl reload nginx.

For Apache, open /etc/security/limits.conf again.

Add user-specific limits for the Apache user, which is often www-data.
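Assuming Apache runs as www-data and the same 65,535 value applies, the entries would look like the fragment below. On RHEL-family systems the user is usually apache.

One caveat: pam_limits only applies to login sessions. If Apache is started by systemd, these lines may have no effect; in that case the service's LimitNOFILE= or the DefaultLimitNOFILE= setting is what counts.

```
# <domain>  <type>  <item>   <value>
www-data    soft    nofile   65535
www-data    hard    nofile   65535
```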

Then restart Apache, for example with sudo systemctl restart apache2. The service is named httpd on RHEL-based systems.

Databases

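For MySQL or MariaDB, edit the server configuration file. Its location depends on the distribution and package. Common examples:

- /etc/mysql/my.cnf
- a file under /etc/mysql/mysql.conf.d/ or /etc/mysql/mariadb.conf.d/
- /etc/my.cnf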

Add an open_files_limit setting to the [mysqld] section.
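A minimal fragment, assuming the same 65,535 value. On Unix, MySQL cannot set open_files_limit higher than the limit the operating system gives the mysqld process. On systemd-managed installs, the service's LimitNOFILE= therefore needs to be at least as high. After restarting, SHOW VARIABLES LIKE 'open_files_limit'; shows the value the server actually got.

```
[mysqld]
# Descriptors mysqld requests at startup; on Unix it cannot exceed
# the limit the operating system grants the process.
open_files_limit = 65535
```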

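Restart MySQL to apply the change, for example with sudo systemctl restart mysql. The unit may be named mysqld or mariadb on other systems.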

Conclusion

The "Too Many Open Files" message indicates that a process on your Linux VPS has hit its file descriptor limit. Ignoring it can crash services, cause failed database connections, and take applications offline. To recover:

1. Check the current limits.
2. Raise them temporarily when needed.
3. Configure permanent limits.

Preventing the issue from returning, however, takes more than raising limits. The end goal should be tuning web servers, databases, and custom applications so they do not hold more files open at once than they need, and close descriptors they no longer use. Monitoring file descriptor usage regularly helps you catch the problem before it affects your users and keeps your VPS performing well.